Accurately predicting cancer subtypes is a cornerstone of modern oncology. These classifications guide prognosis, inform treatment decisions, and improve patient outcomes. Traditionally, this task relied on expert pathologists manually reviewing stained tissue slides—a time-consuming process prone to human variation.

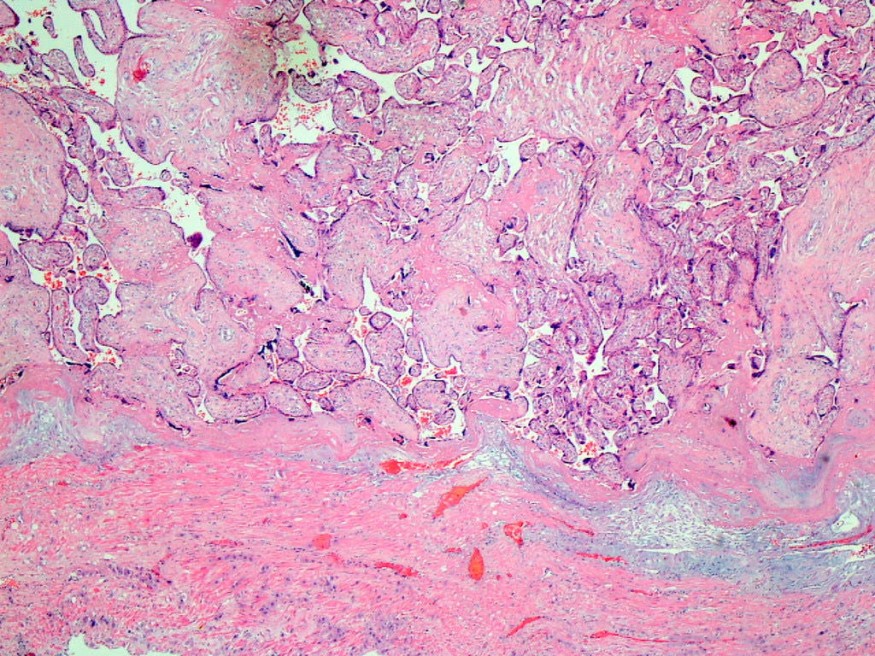

Today, AI models that analyze digital pathology images offer a faster, more scalable alternative that can also improve diagnostic precision. Trained on large datasets of labeled histopathology images, these systems learn to detect subtle patterns associated with specific subtypes. Once deployed, a model can rapidly analyze a tissue image and output a predicted classification, potentially surfacing features that might otherwise go unnoticed.

But in practice, even highly accurate systems often operate as black boxes. Clinicians lack visibility into why a model made a particular prediction. Machine learning teams, too, are left without insight into what went wrong when the model fails. This lack of transparency can also complicate FDA approval, as regulators increasingly scrutinize how reliably AI systems generalize.

This disconnect between performance and understanding is one of the biggest blockers to deploying AI in high-stakes domains like pathology. Accuracy alone is not enough; trust requires transparency.

That's where Tessel comes in. Tessel collaborates with AI-driven pathology companies and life science providers to help them debug and interpret their machine learning models—providing a level of transparency essential for clinical confidence and scientific progress.

Rather than treating model outputs as opaque, Tessel's approach helps teams trace predictions back to the internal concepts the model relies on. These may correspond to specific tissue structures, morphological patterns, or abstract visual features synthesized during training. In many cases, these insights reveal whether the model is attending to meaningful biological signals—or overfitting to irrelevant visual artifacts.
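To make the idea of an "internal concept" concrete, here is a minimal sketch of one generic interpretability technique: a difference-of-means concept direction. It is not a description of Tessel's method, which the article does not detail. The function names and the toy activation values are hypothetical, and the sketch assumes activations have already been extracted from some layer of a model for images that show a concept (say, a particular tissue structure) and for baseline images that do not.

```python
import math

def mean_vector(rows):
    """Element-wise mean of a list of equal-length activation vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def concept_direction(concept_acts, baseline_acts):
    """Unit vector pointing from the baseline mean to the concept mean."""
    concept_mean = mean_vector(concept_acts)
    baseline_mean = mean_vector(baseline_acts)
    diff = [c - b for c, b in zip(concept_mean, baseline_mean)]
    norm = math.sqrt(sum(d * d for d in diff))
    if norm == 0.0:
        raise ValueError("concept and baseline activations have identical means")
    return [d / norm for d in diff]

def concept_score(activation, direction):
    """Projection of one activation vector onto the concept direction."""
    return sum(a * d for a, d in zip(activation, direction))

# Toy 2-D activations (hypothetical values, for illustration only).
concept_acts = [[2.0, 0.0], [4.0, 0.0]]     # images that show the concept
baseline_acts = [[1.0, 1.0], [-1.0, -1.0]]  # images that do not

direction = concept_direction(concept_acts, baseline_acts)
score = concept_score([5.0, 1.0], direction)
print(direction, score)
```

A high score suggests the concept is strongly present in the model's internal representation of that image. Whether the concept actually drives the prediction is a separate question that this sketch does not answer.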

Just as importantly, this analysis sheds light on data quality. By observing how models respond across samples, teams can spot mislabeled images, detect distributional shifts, and identify data that doesn't reflect intended diagnostic criteria—enabling faster, more systematic dataset cleanup and curation.
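One simple way to spot possibly mislabeled images is to flag samples where a model confidently disagrees with the assigned label. The sketch below illustrates that generic heuristic only; it is not Tessel's pipeline. The function name, the 0.9 threshold, and the toy probabilities are hypothetical, and it assumes the probabilities come from held-out predictions so the model has not simply memorized the labels.

```python
def flag_suspect_labels(probs, labels, threshold=0.9):
    """Return indices where the model confidently predicts a class other than the label.

    probs:  per-sample lists of class probabilities (assumed held-out predictions)
    labels: assigned class index for each sample
    """
    suspects = []
    for i, (p, y) in enumerate(zip(probs, labels)):
        predicted = max(range(len(p)), key=lambda k: p[k])
        if predicted != y and p[predicted] >= threshold:
            suspects.append(i)
    return suspects

# Toy predictions over three hypothetical subtypes.
probs = [
    [0.97, 0.02, 0.01],  # label 0, model agrees
    [0.05, 0.93, 0.02],  # label 0, model confidently says 1
    [0.40, 0.35, 0.25],  # label 1, model disagrees but is unsure
    [0.01, 0.01, 0.98],  # label 2, model agrees
]
labels = [0, 0, 1, 2]

suspects = flag_suspect_labels(probs, labels)
print(suspects)  # [1]
```

Flagged samples are candidates for expert review, not confirmed errors: a confident disagreement can also mean the model itself is wrong.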

These insights have immediate, practical benefits. When models fail, machine learning teams often lack a clear next step. Without knowing what the model has actually learned, they're left retraining blindly. Tessel enables a more focused process: teams can pinpoint where generalization breaks down, uncover the root cause, and make targeted updates to the data or architecture—avoiding wasted effort and improving performance.

"We're working with teams that want to go beyond accuracy metrics," says Simon Reisch, co-founder and CTO of Tessel. "They want to understand what their models have actually learned—and whether that aligns with medical reality."

As AI becomes more deeply embedded in cancer diagnosis, explainability is no longer optional. It's not just about justifying past predictions—it's about knowing when a model's decision is grounded in statistically meaningful, biologically relevant concepts, and when it isn't. By uncovering the internal logic behind predictions—and surfacing the quality of the data that shapes them—Tessel is helping usher in a more trustworthy, transparent, and effective generation of AI-powered developer tools in life sciences.

© 2026 ScienceTimes.com All rights reserved. Do not reproduce without permission. The window to the world of Science Times.